Ethical Hacking in the Age of AI

- Ethical Hacking

Ethical hackers today have outgrown the limitations of manual testing, rigid checklists, and static scripts. They’ve become AI-powered cyber detectives, operating at the intersection of human and machine intelligence.

Armed with advanced AI models, they can now move faster than ever before, adapt to shifting threat landscapes in real time, and uncover vulnerabilities across complex digital ecosystems that might have once slipped through traditional methods.

How AI Models Are Redefining Ethical Hacking

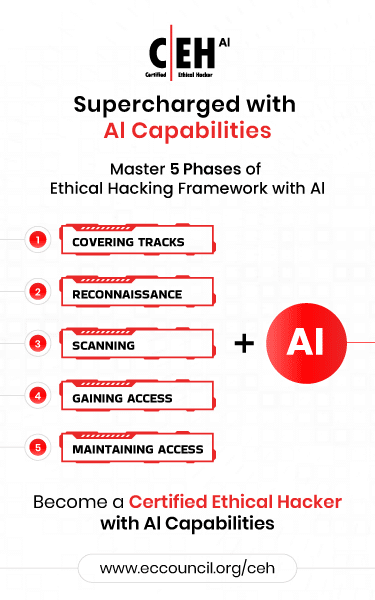

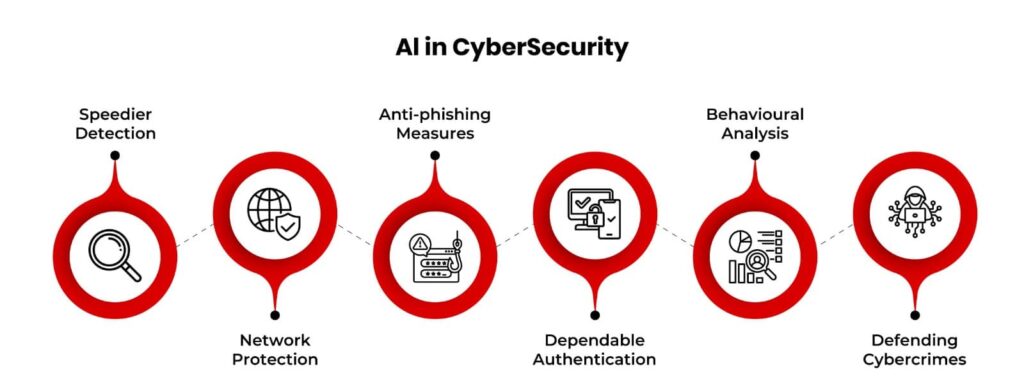

AI, once viewed strictly as a defensive tool for anomaly detection and threat hunting, is now reshaping the offensive playbook. It helps red teams mimic adversarial behavior, simulate social engineering campaigns, generate polymorphic attack payloads, and automate reconnaissance across enterprise networks, cloud platforms, and even AI systems themselves.

AI also enables penetration testers and ethical hackers to scale their operations across thousands of endpoints and cloud assets, using predictive algorithms to prioritize high-risk targets and uncover previously unknown vulnerabilities. With models that learn from data, adapt to countermeasures, and continuously refine attack strategies, the ethical hacker’s role has evolved into one of strategic orchestration rather than brute-force exploration.

The implications are profound: we’re entering a new era where ethical hacking is proactive, AI-powered, and deeply integrated into the development and deployment lifecycle of modern software systems. From red teaming large language models (LLMs) to stress-testing DevSecOps pipelines, today’s ethical hackers are no longer limited by human speed or manual reach. They’re redefining cybersecurity at the very edge of innovation, turning AI into both a weapon and a watchtower in the ongoing fight for digital resilience.

In this AI-driven era, penetration testing, red teaming, and vulnerability assessments have transformed from isolated, time-boxed exercises into continuous, intelligent, and adaptive processes. AI tools can now autonomously map attack surfaces and even generate dynamic payloads that adapt to target responses in real time, often under human oversight. As a result, the ethical hacker’s toolkit is expanding beyond traditional scripts and scanners to include generative models, reinforcement learning agents, and automated reasoning engines.

However, AI is not just safeguarding systems from intrusion; it’s playing both sides of the chessboard. Ethical hackers use AI to defend as well as to emulate advanced threat actors, exploit weaknesses, and stress-test the resilience of both legacy infrastructure and next-generation AI models. This dual-use nature of AI presents both a challenge and an opportunity: a powerful means of enhancing cybersecurity readiness, if wielded with precision, responsibility, and ethical clarity.

What was once a reactive role is now a forward-leaning force in the AI arms race. Ethical hackers must now think like engineers, strategists, and adversaries, augmented by AI, guided by ethics, and driven by the urgency to outpace evolving threats.

As we dive deeper into AI-assisted red teaming, it’s essential to explore not only the capabilities but also the implications, risks, and evolving responsibilities that come with this new form of cyber offense.

AI Is a Game-Changer for Ethical Hackers

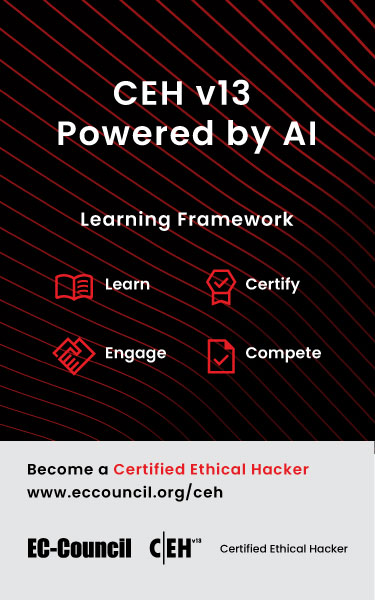

AI is fundamentally reshaping the way security assessments are performed. Traditionally, ethical hacking has required, and continues to require, deep technical expertise, including meticulous manual testing, detailed knowledge of system architectures, and an intimate understanding of known vulnerabilities and exploit chains. Each task, from reconnaissance to reporting, demanded significant time, effort, and specialization. Now, AI is redefining that paradigm.

By automating many of the most time-consuming and repetitive elements of ethical hacking, such as network scanning, asset discovery, and social engineering simulations, AI frees up red teamers to focus on more complex and strategic exploits.

AI models are also highly effective at detecting subtle patterns and anomalies within vast datasets, surfacing hidden vulnerabilities or behavioral inconsistencies that might evade even the most experienced human analyst. This pattern recognition allows ethical hackers to uncover elusive threats, such as privilege escalation chains or API logic flaws, that typically require deep contextual awareness.

More impressively, AI can now assist in generating sophisticated offensive strategies. From crafting targeted phishing campaigns and language-specific pretexts to generating AI-assisted exploit payloads in authorized testing environments and simulating prompt injection attacks against AI systems, these capabilities bring a new level of realism and adaptability to red team operations.

The result is a seismic shift: security testing that is not only faster and broader in scope, but also more adaptive to the constantly evolving threat landscape.

Examples of AI Models Powering Ethical Hacking

ChatGPT for Social Engineering and Red Team Simulations

One of the most prominent examples of AI in ethical hacking is the use of LLMs like ChatGPT to simulate social engineering scenarios and enhance red team operations. These models can rapidly generate convincing, context-aware phishing emails, drawing from publicly available OSINT data to tailor language, tone, and content to specific targets. Red teams can also craft multilingual pretexts for spear-phishing campaigns or test AI-driven customer support systems for vulnerabilities such as prompt injection and jailbreak exploits.

However, LLMs must be handled responsibly; AI-generated content should always remain within the scope of authorized testing to ensure it doesn’t cause real-world harm or cross ethical boundaries.

DeepSeek for Automated Reconnaissance and Vulnerability Discovery

DeepSeek is an open-source, LLM-based ecosystem that can be leveraged in AI-assisted reconnaissance and vulnerability discovery workflows, enabling ethical hackers to perform large-scale OSINT gathering and vulnerability discovery with efficiency.

Designed to automate what would typically require hours of manual effort, AI-assisted reconnaissance workflows can rapidly analyze outputs from scans of domains, subdomains, and public-facing APIs to help map an organization’s attack surface. These workflows can also support the analysis of weak configurations and exposed assets across cloud environments, flagging potential risks that might otherwise go unnoticed.

What sets DeepSeek apart is its ability to intelligently correlate findings, helping red teams prioritize high-impact vulnerabilities over false positives. By streamlining the reconnaissance phase, it allows human operators to shift their focus to more advanced tasks like lateral movement and exploit chaining, accelerating the overall red team workflow without sacrificing depth or accuracy.

AI-Based Fuzzers for Zero-Day Discovery

As AI systems become deeply embedded in enterprise infrastructure, ethical hackers within organizations that deploy them are now tasked with targeting the models themselves. This new frontier of offensive security includes testing LLMs and ML systems for vulnerabilities beyond traditional software flaws, while still accounting for them.

Red teams are now performing adversarial prompt testing to uncover jailbreaks, unauthorized data access, and prompt injection attacks. They’re also assessing bias, fairness, and harmful output across generative models, ensuring that AI systems behave reliably and ethically under pressure. In more advanced scenarios, real-world adversaries may use adversarial input manipulation, such as image perturbations or data poisoning, to manipulate or corrupt model behavior, reinforcing the need for AI security.

The challenge has evolved: it’s no longer just about using AI as a tool for hacking, but about ethically and responsibly hacking AI itself. This requires a new blend of adversarial thinking, model interpretability, and ethical foresight to ensure AI systems are both powerful and safe.

Ethical Considerations

The integration of AI into ethical hacking introduces new ethical challenges that demand careful consideration. While automation enhances speed and efficiency, it also increases the risk of unintended consequences. Without proper oversight, AI-driven tools can cause collateral damage, misidentify targets, overstep testing boundaries, or generate malicious payloads that go beyond their intended scope. Additionally, as AI tools collect and analyze massive datasets, data privacy concerns grow, especially when scraping or processing personal or sensitive information.

To uphold professional integrity, ethical hackers must apply strict ethical discipline when using AI. That means operating only within authorized scopes, never testing systems or data without explicit approval, and maintaining full transparency with clients regarding the AI tools and methods employed. Proper documentation, informed consent, and regular audits are essential. Furthermore, adherence to established frameworks, such as the NIST AI Risk Management Framework (AI RMF) and EC-Council’s Code of Ethics, helps ensure that AI-powered security assessments remain both effective and responsible.

The Future of AI-Powered Ethical Hacking

Rather than sidelining human expertise, AI is amplifying it, enabling red teamers and security professionals to operate with greater speed, precision, and reach than ever before. By merging human creativity and intuition with AI’s computational power, ethical hackers can uncover vulnerabilities that were previously too complex, subtle, or time-consuming to detect.

However, with this enhanced capability comes a clear imperative: responsibility must scale alongside innovation. As AI becomes an integral part of offensive security operations, the community must commit to ethical standards, transparency, and continuous learning. Clear guidelines, responsible tool usage, and ongoing education are essential to ensure AI remains a force for good and not an unchecked risk.

In the hands of well-trained professionals, AI is not just a tool for testing security; it’s a catalyst for building safer, more resilient systems for the future.

References

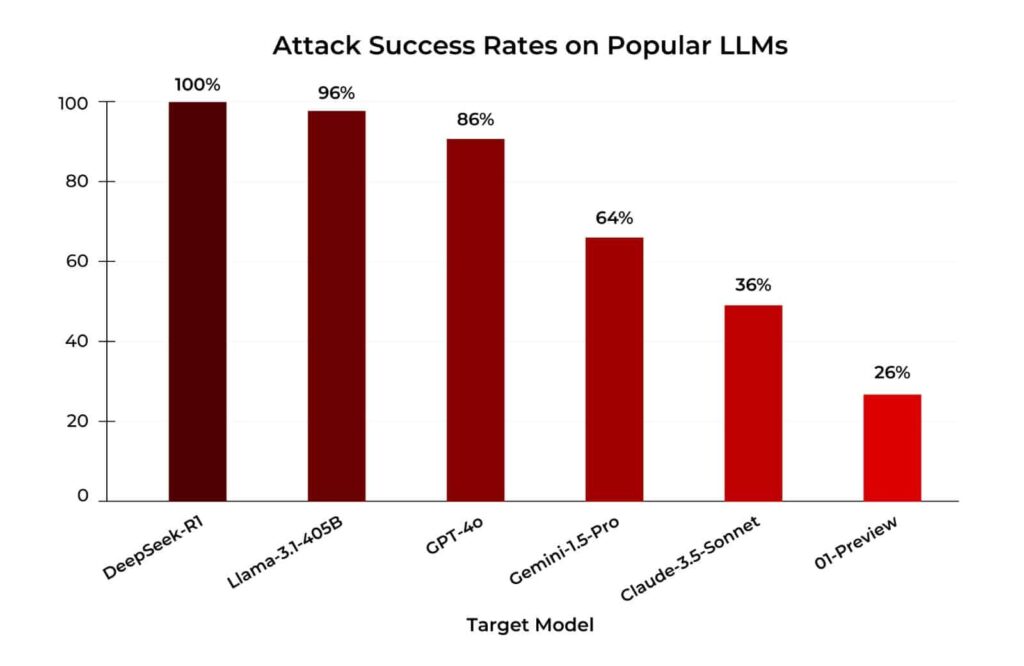

Kassianik, P., & Karbasi, A. (2025, January 31). Evaluating Security Risk in DeepSeek and Other Frontier Reasoning Models. Cisco Blogs. https://blogs.cisco.com/security/evaluating-security-risk-in-deepseek-and-other-frontier-reasoning-models

About the Author

Alexander Reimer

Certified Ethical Hacker (CEH)

Alexander Reimer is a Certified Ethical Hacker (CEH), AI red team specialist, and technical writer at EC-Council. He has led red-teaming projects at Meta Superintelligence Labs, evaluated LLM models at Microsoft, and conducted adversarial testing and AI-driven annotation at Google. Alexander brings a multilingual perspective to cybersecurity, having supported global NLP, red teaming, and prompt engineering efforts across multiple high-profile platforms.

He also serves as a mentor at Udacity for the German and U.S. markets, volunteers as a mentor at ADPList, and contributes regularly to AI security research, training, and community education efforts. Alexander actively supports global knowledge access through Translators without Borders and has translated courses in cybersecurity, AI, and data science for TEDx, Coursera, and Khan Academy.

With a background in IT management, business psychology, and cybersecurity, his current focus is on the intersection of responsible generative AI and cybersecurity.