Certified Offensive AI Security Professional

Master the Tactical Methodology to Hack LLMs and Secure Agentic AI, the Global Command for Offensive Teams

LLMs are vulnerable. Prompt injection bypasses guardrails. Data poisoning corrupts models. This credential validates you can red-team AI systems, exploit vulnerabilities in LLMs and agents, and build defenses that survive real-world attacks.

Attack Vectors

Prompt injection. Data poisoning. Model theft. Attackers are exploiting AI faster than security teams can learn to defend.

Your Credential

Certified Offensive AI Security Professional. Validate you can simulate attacks, find vulnerabilities, and harden AI systems.

Program Inquiry

4.7 on Trustpilot

from 350,000+ trained professionals

Trusted By

AI Red-Teaming Is a New Discipline

Traditional pentesting doesn’t cover LLM vulnerabilities. Prompt injection, data poisoning, and model manipulation require specialized offensive skills. C|OASP is the first credential built specifically for AI red teamers.

The Problem

- Prompt injection impacts 73%+ of production AI deployment#

- No standardized AI red-teaming methodology

- Security teams lack LLM exploitation skills

C|OASP CREDENTIAL VALIDATES

The C|OASP certification equips you to red-team AI systems end-to-end, from prompt injection to model exploitation. Master offensive techniques that break AI before attackers do.

- Master prompt injection, jailbreaking, and guardrail bypass

- Learn OWASP LLM Top 10 & MITRE ATLAS attack chains

- Build AI defenses that survive adversarial testing

Source: * IBM X-Force Threat Intelligence, 2024; ** Palo Alto Networks; # Resecurity, citing OWASP Top 10 for LLM Applications (2025)

Source: * IBM X-Force Threat Intelligence, 2024; ** Palo Alto Networks; # Resecurity, citing OWASP Top 10 for LLM Applications (2025)

Red-team agentic AI systems

Build defenses that survive real attacks

"Prompt injection bypasses every guardrail you've built."

Hack LLMs. Break Agents. Secure AI.

VALIDATE YOUR

LLM EXPLOITATION SKILLS

Over 73% of LLMs are vulnerable to prompt injection.

Traditional pentesting doesn’t cover AI-specific attack vectors. COASP trains you to exploit LLMs, agents, and AI pipelines, then build defenses that actually work.

The Market Problem

- Pentesters don't know how to exploit LLMs or AI agents

- No standardized methodology for AI red-teaming

- Traditional vulnerability scanners miss AI-specific flaws

- SOC teams can't detect AI-powered attacks

- Security architects don't understand AI threat models

Skills This Program Verifies

The COASP credential validates your ability to:

- Execute prompt injection, jailbreaking, and prompt chaining attacks

- Red-team AI agents: memory corruption, tool misdirection, and checkpoint manipulation

- Apply OWASP LLM Top 10 and MITRE ATLAS frameworks

- Conduct adversarial ML attacks: data poisoning, model extraction

- Build detection rules and hardening strategies for AI systems

Organizations need professionals with verified offensive AI skills. This credential proves you have them.

20+

Target Roles

Who is C|OASP Ideal For

C|OASP is designed for security professionals who want to master offensive and defensive AI security techniques.

Offensive Security

Defensive Security

Threat Intelligence

AI/ML Engineering

Security Engineering

AI Security Architecture

The market does not need more AI tools. It needs AI security professionals.

Be the professional who exposes weaknesses in AI systems before attackers do and helps organizations deploy AI securely at scale.

Program Overview

10 comprehensive modules!

Master offensive AI security from reconnaissance to red teaming. The C|OASP certification covers attack methodologies, vulnerability exploitation, and incident response.

Offensive AI and AI System Hacking Methodology

Build a strong foundation in offensive AI security by understanding how AI systems work, where they fail, and how they are attacked, using structured hacking methodologies and globally recognized AI security frameworks.

WHAT YOU WILL LEARN

AI Reconnaissance and Attack Surface Mapping

Learn advanced AI-focused OSINT techniques to identify, enumerate, and analyze AI assets, data pipelines, models, APIs, and attack surfaces, and apply exposure mitigation and hardening strategies to support continuous AI security monitoring.

WHAT YOU WILL LEARN

AI Vulnerability Scanning and Fuzzing

Master AI-specific vulnerability assessment and fuzzing techniques to identify, analyze, and mitigate security weaknesses across modern AI systems and applications.

WHAT YOU WILL LEARN

Prompt Injection and LLM Application Attacks

Analyze and exploit LLM trust boundaries using advanced prompt injection, jailbreaking, and output manipulation techniques, while identifying risks related to sensitive data exposure and insecure LLM application design.

WHAT YOU WILL LEARN

Adversarial Machine Learning and Model Privacy Attacks

Execute and analyze adversarial machine learning, privacy, and model extraction attacks to assess AI system robustness, trustworthiness, and risk, and apply defensive strategies to mitigate them.

WHAT YOU WILL LEARN

Data and Training Pipeline Attacks

Compromise AI systems through data poisoning and backdoor insertion targeting training pipelines and model integrity.

WHAT YOU WILL LEARN

Agentic AI and Model-to-Model Attacks

Analyze and exploit autonomous AI agents and multi-model architectures by targeting excessive agency, cross-LLM interactions, orchestration workflows, and unbounded resource consumption while understanding defensive strategies for securing agentic systems.

WHAT YOU WILL LEARN

AI Infrastructure and Supply Chain Attacks

Explore offensive techniques targeting AI infrastructure, system integrations, and third-party dependencies, while learning how to identify, exploit, and harden AI supply chain weaknesses.

WHAT YOU WILL LEARN

AI Security Testing, Evaluation, and Hardening

Apply structured AI security testing and evaluation methodologies to assess risk, validate controls, and implement hardening best practices across enterprise AI systems.

WHAT YOU WILL LEARN

AI Incident Response and Forensics

Master AI-specific incident response and forensics, concluding with hands-on engagement in AI red team activities.

WHAT YOU WILL LEARN

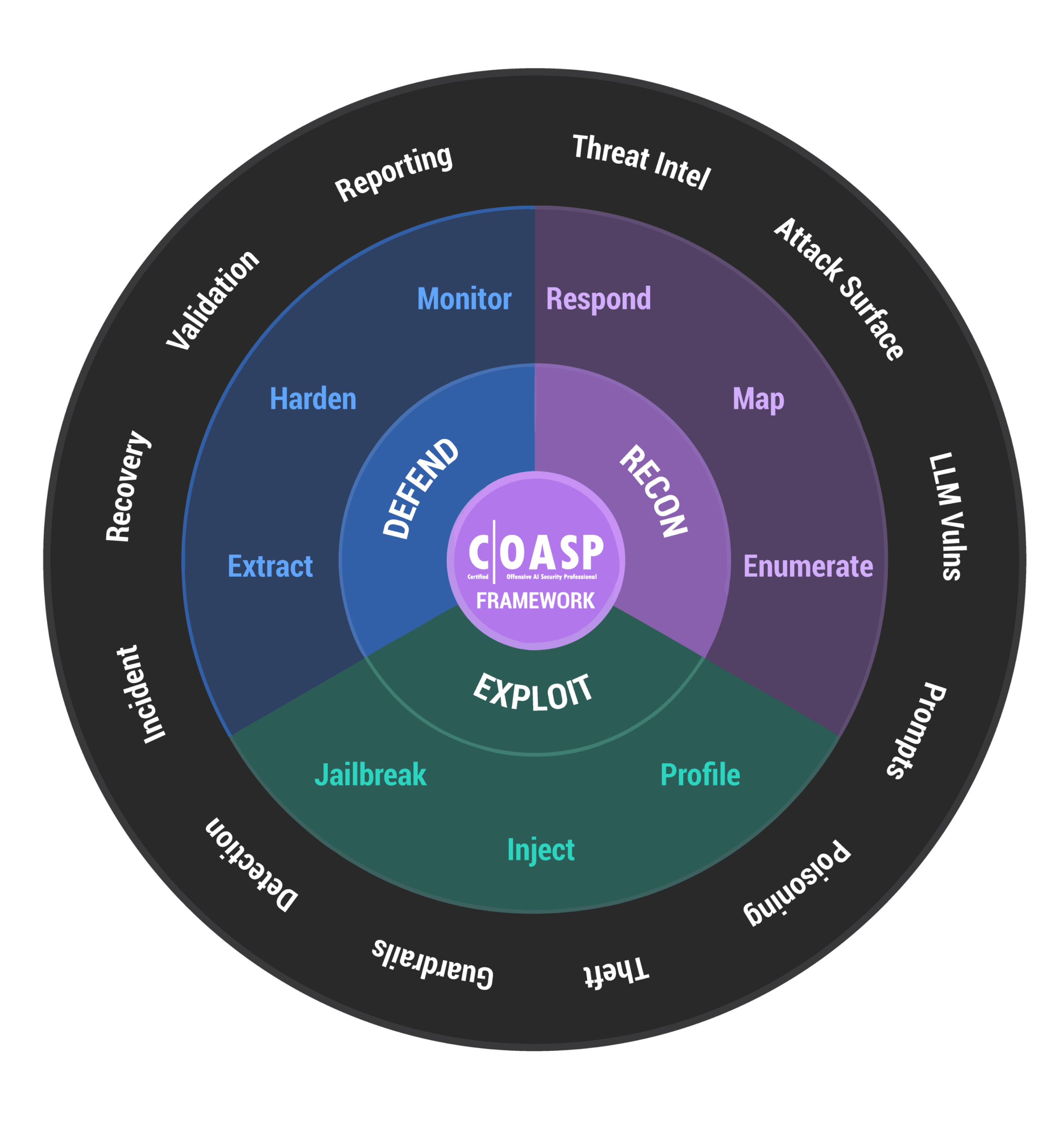

Offensive AI

Security

Methodology

From reconnaissance to exploitation, testing to hardening, there is a systematic approach to securing AI systems against adversarial threats.

This framework equips you to think like an attacker and defend like an expert.

01 RECON

Map AI system architectures, enumerate exposed endpoints, and build threat models. Profile training pipelines, data flows, and inference APIs to identify where defenses are weakest.

02 EXPLOIT

Execute prompt injection, jailbreaking, data poisoning, and model extraction attacks to validate AI system weaknesses and document exploitable gaps

03 DEFEND

Implement guardrails, detection mechanisms, and incident response procedures to harden AI systems and ensure resilient, secure deployments

C|OASP

Framework

The Threat Landscape

WHY TRADITIONAL

SECURITY

FAILS AGAINST AI

AI systems introduce novel attack vectors that traditional security tools and methodologies cannot detect or prevent. Understanding these threats is the first step to defending against them.

Prompt Injection

Attackers manipulate LLM inputs to bypass safety guardrails and extract sensitive data or execute unauthorized actions.

Model Extraction

Data Poisoning

Jailbreaking

Hands-On AI Offensive Security Techniques

RED-TEAM.

EXPLOIT.

DEFEND.

Master the offensive techniques that break AI systems before attackers do. From prompt injection to model extraction, learn to think like an adversary and defend like an engineer.

Multi-Protocol Reconnaissance

Enumerate AI-related endpoints across REST and gRPC services

Telemetry Analysis to Map AI Decision Boundaries

Analyze model outputs to reverse-engineer decision logic and thresholds

API Reconnaissance

Discover and map AI API endpoints, parameters, and authentication mechanisms

AI Reconnaissance via Model Fingerprinting

Identify AI models, versions, and configurations through behavioral analysis

Transfer, Boundary & Noise Attacks

Perform black-box adversarial attacks across AI model architectures

PGD Attacks on Audio Models

Deploy gradient attacks on audio classification and transcription models

Cross-LLM Attacks

Assess and exploit attack vectors in cross-LLM systems

API Reconnaissance & Model Extraction

Discover AI API endpoints and extract model weights from exposed infrastructure

RAG Poisoning Attacks

Inject malicious content into retrieval-augmented generation pipelines

PGD Attacks on Image Classifiers

Execute gradient-based adversarial attacks on computer vision models

FGSM & PGD Attacks on Image Classifiers

Execute gradient-based adversarial attacks on computer vision models

Atheris

AFL

CleverHans

ART

Foolbox

Alibi Detect

TFDV

Fairlearn

IBM AI Fairness 360

PyRIT

Burp Suite

OWASP ZAP

Prompt Fuzzer

ToolFuzz

Tensorfuzz

FuzzyAI

FuzzyAI

AI SECURITY EXPERTS EVERYWHERE

Every sector needs AI security experts!

With 87% of organizations facing AI-driven attacks, offensive security skills are mission-critical. C|OASP certifies you to red-team AI systems and defend against adversarial threats across every sector.

$28.6B¹

GLOBAL AI-ENHANCED BREACH LOSSES

Finance & Banking

AI fraud model hardening, LLM chatbot security, regulatory compliance testing

38.9%²

TECH EMPLOYEE AI ADOPTION

Technology

Enterprise LLM security, RAG pipeline hardening, AI DevSecOps integration

HIPAA

COMPLIANCE CRITICAL

Healthcare

Medical AI red-teaming, HIPAA-compliant security testing, clinical AI validation

DCWF

ALIGNED CURRICULUM

Government

DoD AI security frameworks, critical infrastructure protection, counter-adversarial ML

85%³

CONSIDER ADVERSARIAL AI A TOP SECURITY PRIORITY

Defense

Aerospace security, military systems, supply chain protection

Sources: ¹Market.Biz; ²Cyberhaven 2025; ³Gitnux

Threat Analysis

AI Security Monitoring

Network Defense

Infrastructure Protection

Red Team Ops

Offensive Security Testing

Prepares You For

The C|OASP certification opens doors to cutting-edge roles in offensive AI security, adversarial research, and AI risk management.

Offensive AI Security

- AI Red Team Specialist/Adversarial AI Engineer

- Offensive Security Engineer (AI/LLM Focus)

- Adversarial AI Security Analyst

AI Research & Analysis

- Adversarial Machine Learning Researcher

- AI Threat Hunter/AI Security Analyst

- AI Malware & Exploit Analyst

AI Incident & Testing

- AI Incident Response Engineer

- AI Test & Evaluation Specialist

- Cyber Threat Intelligence (CTI) Analyst – AI Focus

AI Engineering & Ops

- Secure AI Engineer/AI Security Architect

- ML Ops/AI Ops Security Specialist

- LLM Systems Engineer

AI Risk & Assurance

- AI Model Risk Specialist

- AI Risk & Assurance Specialist

- AI Risk Advisor/Consultant

AI Security Leadership

- Security Program Manager (AI Security)

- AI Product Security Manager

THE AI SECURITY GAP

ORGANIZATIONS

CAN'T SECURE

AI SYSTEMS

AI attacks are evolving faster than defenses, and most organizations lack offensive security expertise to test their AI systems.

Enterprises need certified AI security professionals who can red team LLMs, exploit vulnerabilities, and harden AI systems before attackers strike.

87% of organizations faced AI driven attacks in 2024.¹ OWASP warns that LLMs have 10 critical vulnerability categories most teams do not test.²

Sources: ¹ IBM X-Force Threat Intelligence, 2024; ² OWASP LLM Top 10, 2024

The Security Gap Organizations Face

- Companies deploy AI but cannot identify adversarial vulnerabilities

- Security teams understand networks but not AI-specific attack vectors

- ML engineers build models but do not red-team their own systems

- Result: AI deployments without security testing = breach waiting to happen

What This Credential Validates

Verify the skills that make you the AI leader organizations need:

- This credential validates your ability to red-team AI systems

- Verified skills in prompt injection, jailbreaking, and model exploitation

- Credential proves confidence testing enterprise AI defenses

- Industry-recognized proof of offensive AI security expertise

- Validation that you can find vulnerabilities before attackers do

What Your Organization Gets

Solve the AI security crisis:

- Identify AI vulnerabilities before production deployment

- Build robust defenses against adversarial AI attacks

- Protect LLMs and AI agents from exploitation

- Clear security validation for AI investments

OFFENSIVE AI SECURITY SALARY DATA

What AI Security Professionals Are Earning in 2026

Demand for professionals who can simulate adversarial attacks, test AI systems, and defend enterprise AI continues to outpace supply.

$175K

Average Salary (US)

28K+

Open Positions

AI Security Engineer

$183,000

Median salary

Range: $140,000 – $210,000

Source: Glassdoor.com

AI Engineer

$140,000

Median salary

Range: $112,000 – $178,000

Source: Glassdoor.com

AI Data Engineer

$112,000

Median salary

Range:$99,000 – $136,000

Source: 6figr.com

Senior Machine Learning Engineer

$164,000

Median salary

Range: $121,000 – $207,000

Source: Payscale.com

Organizations will pay premium salaries for professionals who can solve the AI security crisis. C|OASP makes you that solution.

AI Security Salary Data

What AI Security Pros Are Earning in 2026

Demand for professionals who can simulate adversarial attacks, test AI systems, and defend enterprise AI continues to outpace supply.

Adversarial AI Security Analyst

$188,000

Median salary

Range: $146,000 – $230,000

Adversarial Machine Learning Researcher

$173,000

Median salary

Range: $125,000 – $221,000

AI Threat Hunter / AI Security Analyst

$133,500

Median salary

Range: $113,600 – $153,500

AI Incident Response Engineer

$138,500

Median salary

Range: $103,000 – $174,000

Secure AI Engineer / AI Security Architect

$234,000

Median salary

Range: $179,000 – $289,000

Source: Glassdoor.com

AI Model Risk Specialist

$140,500

Median salary

Range: $105,000 – $176,000

Source: Glassdoor.com

AI Risk & Assurance Specialist

$140,500

Median salary

Range: $105,000 – $176,000

Source: 6figr.com

Security Program Manager (AI Security)

$183,000

Median salary

Range: $146,000 – $220,000

AI Product Security Manager

$195,000

Median salary

Range: $151,000 – $239,000

*Note All salary information is based on aggregated market data from publicly available sources and reflects US estimates. Actual salaries may vary based on location, education and other qualifications, skills showcased during the interview, and other factors.

C|OASP PROGRAM FAQs

FREQUENTLY

ASKED

QUESTIONS

What is the Certified Offensive AI Security Professional (C|OASP) certification?

C|OASP is EC-Council’s offensive AI security program designed for cybersecurity professionals who must think like attackers and defend AI like engineers. It trains you to red-team LLMs, exploit AI systems, and defend enterprise AI before attackers do.

Who should take the C|OASP certification?

C|OASP is ideal for red-team and blue-team professionals, SOC analysts, penetration testers, AI/ML engineers, DevSecOps specialists, and compliance managers responsible for AI safety in regulated industries like finance, healthcare, and defense.

What this program covers?

This program covers prompt injection attacks, model extraction and theft, training data poisoning, agent hijacking, LLM jailbreaking, and defensive engineering techniques. The curriculum is aligned with industry frameworks, including OWASP LLM Top 10, NIST AI RMF, and ISO 42001.

Do I need prior cybersecurity experience?

Yes, this program requires foundational cybersecurity knowledge. This is not a beginner course, it’s hands-on offensive security training for professionals who already understand security fundamentals.

What's included in the certification?

The program includes 10 comprehensive modules, hands-on adversarial labs with real AI systems, DCWF-aligned learning paths, certification exam, lifetime access to materials, and access to the AI security community.

"*" indicates required fields